Kubernetes is powerful, but operating its clusters can be a complex and often repetitive task. The sheer number of commands and parameters, combined with the constant need to consult documentation, can slow developers and DevOps engineers down. This is where Artificial Intelligence comes in, promising to simplify this interaction.

In this article, I explore kubectl-ai, a plugin that seeks to translate the complexity of Kubernetes into natural language. I cover its advantages, the implications of using it and how you can start using it to optimize your day-to-day operations.

The challenge of day-to-day Kubernetes operation

Despite being the most popular container orchestration tool, Kubernetes has a steep learning curve and a command-line interface (kubectl) with numerous options. This leads to:

- Command complexity: constantly memorizing or consulting the exact syntax for specific tasks.

- Time spent researching: the need to search for documentation for less frequent or specific commands.

- Potential for errors: small mistakes in typing or parameters can lead to unexpected behavior in the cluster.

- Entry barrier: hinders the productivity of new users or teams less familiar with the environment.

These challenges are what motivate kubectl-ai, which presents itself as a promising solution to them.

What is kubectl-ai?

kubectl-ai is a plugin for kubectl designed to act as an intelligent assistant. Its aim is to simplify interaction with Kubernetes by allowing users to express their intentions in natural language, which the plugin then translates into executable kubectl commands.

Basically, it bridges the gap between the complexity of the command line and the intuition of human language, using Artificial Intelligence models to interpret your requests.

Advantages of kubectl-ai in Kubernetes operation

Adopting a tool like kubectl-ai can bring significant benefits to teams and professionals working with Kubernetes:

- Accelerated learning: newcomers to Kubernetes can familiarize themselves more quickly with commands and concepts, generating valid commands from simple descriptions.

- Increased productivity: reduces the time spent consulting documentation and on trial and error with commands, allowing engineers to concentrate on more complex tasks.

- Reduction of operational errors: generating commands with correct syntax minimizes the chance of typing errors or incorrect parameters that could affect the environment.

- Simplifying complex tasks: helps build commands for more elaborate operations, making it easier to perform tasks that would otherwise be tedious or error-prone.

- Explanation of commands: in addition to generating commands, some implementations can explain what a particular kubectl command does, deepening the user’s understanding.

Limitations and implications of using AI in CLI tools

Despite its advantages, it is crucial to understand the limitations and implications of using AI in operational tools such as kubectl-ai:

- AI accuracy: AI’s interpretation of natural language is not perfect. The command generated may not be exactly what the user intended, requiring human review before execution. AI may not capture nuances or the full context of the operation.

- Dependency on Large Language Models (LLMs): kubectl-ai’s functionality depends on integration with external LLMs (such as Google’s Gemini or OpenAI’s models), so your requests are processed by a third-party service. This implies:

- Security and privacy concerns:

- Sending data: by describing your intent in natural language, you may inadvertently send sensitive information about your cluster, your applications or your data to the external AI service. It is vital to be aware of what is being sent and of the LLM provider’s privacy terms.

- Permissions: the generated command runs with the permissions of your kubeconfig credentials, so if it is not carefully reviewed it may perform broader actions than you intended.

- Environment-specific context: the AI may not be aware of your cluster’s specific configurations, internal security policies or your organization’s naming conventions.
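Because a generated command runs with your own credentials, some verbs deserve a closer look before you approve them. The following is a minimal POSIX shell sketch, not part of kubectl-ai: the hypothetical `needs_extra_review` function flags a proposed kubectl command string when it contains a verb from an illustrative, deliberately incomplete list of operations that can change cluster state.

```shell
# Hypothetical helper, not part of kubectl-ai: succeeds (returns 0) when a
# proposed kubectl command contains a verb that can change cluster state.
# The verb list is illustrative and incomplete; it errs toward flagging
# (for example, it also flags the read-only "kubectl rollout status").
needs_extra_review() {
  for verb in delete drain cordon taint replace patch apply edit scale rollout label annotate; do
    # Pad the command with spaces so a verb at the start or end of the
    # string still matches as a whole word; the quoted $verb matches literally.
    case " $1 " in
      *" $verb "*) return 0 ;;
    esac
  done
  return 1
}

for cmd in "kubectl get pods -n default" "kubectl delete pod my-pod-12345-abcde"; do
  if needs_extra_review "$cmd"; then
    echo "review carefully: $cmd"
  else
    echo "no flagged verb:  $cmd"
  fi
done
```

A flagged command is not necessarily wrong, and an unflagged one is not necessarily safe; the check only marks where to slow down. For flagged commands, many mutating kubectl subcommands (such as apply, delete and scale) accept `--dry-run=server`, which asks the API server to validate the request without persisting it.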

How to start using kubectl-ai

To try out kubectl-ai, you’ll need a configured Kubernetes cluster and an API key for an LLM provider (such as Google’s Gemini or OpenAI).

By default, kubectl-ai uses Gemini as its language model, but you can use other models; see the project’s documentation for details.

Prerequisites

- kubectl installed and configured to access your Kubernetes cluster.

- An API key from a compatible LLM provider (e.g. a Gemini API key or an OpenAI API key).

Plugin installation

Installation is usually done via krew, the plugin manager for kubectl:

kubectl krew install ai

If you don’t have krew, follow the installation instructions at krew.sigs.k8s.io.
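kubectl discovers plugins as executables named `kubectl-<name>` on your PATH, and krew places them in its own bin directory (`$HOME/.krew/bin` by default, or under `KREW_ROOT` if that variable is set). A minimal sketch to confirm the plugin is reachable after installation; `check_plugin` is a hypothetical helper name used only for illustration:

```shell
# Add krew's bin directory to PATH, as the krew installation guide suggests
# doing in your shell profile. KREW_ROOT defaults to $HOME/.krew.
export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"

# Hypothetical helper: "kubectl <name>" only works if an executable named
# kubectl-<name> can be found on PATH.
check_plugin() {
  if command -v "kubectl-$1" >/dev/null 2>&1; then
    echo "found: $(command -v "kubectl-$1")"
  else
    echo "kubectl-$1 not found on PATH" >&2
    return 1
  fi
}

check_plugin ai || echo "install it with: kubectl krew install ai"
```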

API Key Configuration

Export your API key as an environment variable. In my case, I used a Gemini API key, which you can get from Google AI Studio:

export GEMINI_API_KEY="YOUR_KEY_HERE"

Alternatively, follow the plugin’s documentation for other ways of configuring credentials.
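kubectl-ai runs as a separate process, so it only sees the key if the variable is exported, not merely assigned in your current shell. A small sketch that fails fast when the key is missing, without printing its value; `require_env` is a hypothetical helper, not part of the plugin:

```shell
# Hypothetical helper: succeed only if the named variable is exported and
# non-empty. printenv reads the exported environment, which is exactly what
# a child process such as kubectl-ai inherits; unexported shell variables
# are invisible to it.
require_env() {
  if [ -z "$(printenv "$1")" ]; then
    echo "error: $1 is not exported or is empty" >&2
    return 1
  fi
  echo "$1 is set (value not shown)"
}

# Check the key before calling the plugin.
require_env GEMINI_API_KEY || echo "export GEMINI_API_KEY before running kubectl-ai" >&2
```

In an interactive session this can be chained, for example `require_env GEMINI_API_KEY && kubectl-ai "show all pods in default namespace"`.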

Examples of use

Now you can start interacting:

# Gerar um comando:

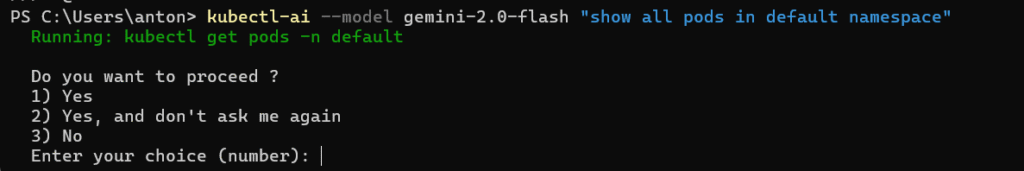

kubectl-ai "show all pods in default namespace"

# Saída esperada: kubectl get pods -n default

# Escalar um deployment:

kubectl-ai "scale deployment my-app to 3 replicas"

# Saída esperada: kubectl scale deployment my-app --replicas=3

# Obter logs:

kubectl-ai "get logs from pod my-pod-12345-abcde"

# Saída esperada: kubectl logs my-pod-12345-abcde
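When a suggested command ends up in a script of your own, rather than being run through the plugin’s interactive flow, it is worth keeping a human approval step. A minimal POSIX shell sketch of that pattern; `confirm_and_run` is a hypothetical name and is not part of kubectl-ai:

```shell
# Hypothetical wrapper: show a proposed command, ask for confirmation on
# stdin, and run it only on an explicit "y" or "yes". Any other answer,
# including an empty line or end of input, skips the command.
confirm_and_run() {
  printf 'Proposed command:\n  %s\n' "$1"
  printf 'Run it? [y/N] '
  answer=""
  read -r answer || true   # EOF leaves answer empty, which means "no"
  case "$answer" in
    y|Y|yes|YES)
      sh -c "$1"           # run the string in a fresh shell, exactly as shown
      ;;
    *)
      echo "Skipped."
      return 1
      ;;
  esac
}

# Non-interactive demo: the answer is piped in. In real use, type it at the prompt.
printf 'y\n' | confirm_and_run 'echo "kubectl get pods -n default"'
```

Defaulting to "no" means that a stray Enter key or a closed input stream never executes anything.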

In the examples above, kubectl-ai returns the command it intends to run and asks for your approval before executing it.

Conclusion

kubectl-ai represents an interesting step in the evolution of operations tools, bringing the promise of simplifying interaction with complex systems like Kubernetes through Artificial Intelligence. For DevOps Engineers and Cloud Architects, this tool can be a valuable productivity and learning accelerator.

However, like any emerging technology, its use requires discernment. Combining the speed of AI with human judgment is the safest and most effective way forward. Use kubectl-ai to optimize, but always review and validate the commands and their security implications. The future of cloud operations is increasingly intelligent, and staying ahead of the learning curve is key.