When we think about artificial intelligence in software development, the conversation often falls into one of two extremes: either AI is seen as the ultimate solution to all our problems, delivering perfect code in seconds, or as a looming threat that will soon make our jobs obsolete. I believe the truth, as in most things in life, lies somewhere in between.

At its core, AI is not a replacement for the developer. It is a high-level assistant, a tool that extends our capacity: a co-creator, not the final author of the work. With that mindset, our commitment to quality becomes even more vital.

Productivity and Co-Creation in Practice

Tools like ChatGPT are true accelerators for our workflow. They can help us generate boilerplate code, debug problematic snippets, or even translate routines from one language to another. Productivity can rise sharply as they free us from repetitive, mechanical tasks.

I'll be honest: I use artificial intelligence every day. Rather than treating it as a mere code generator, I see it as both a strategic consultant and a tactical assistant. I use Gemini in a consultative way to gain a broader perspective on my day-to-day needs, such as crafting value propositions for clients or structuring high-quality materials for my business, like this very post.

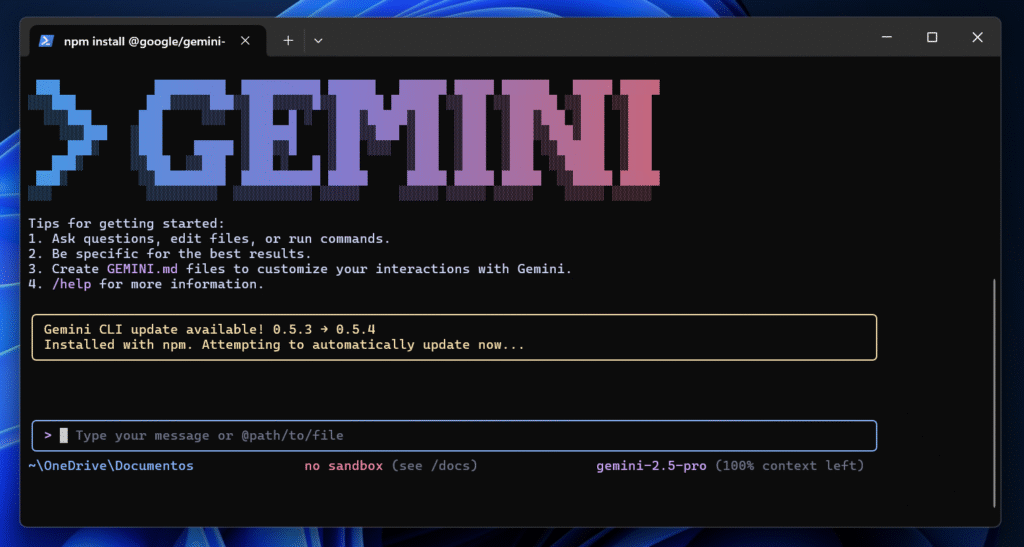

On the more technical side, I use Gemini CLI to assist me directly in the terminal during development, speeding up tasks and providing quick insights. For me, this is living proof that AI doesn't eliminate our role; it gives us superpowers that make us more efficient.

Mastering Collaboration: Tools and Skills

For co-creation to succeed, developers must master new skills and processes. The quality of our collaboration with AI will ultimately determine the quality of our work.

Prompt Engineering: The New Developer Skill

If AI is the engine, the “prompt” is the fuel. The quality of the output we get from tools like Gemini depends heavily on the clarity and context we provide. Mastering the craft of writing effective prompts, known as “prompt engineering”, is the new language of the co-creator.

- Be a Context Architect: Provide clear and detailed instructions. Define a “role” for the AI (e.g., “Act as a cloud security expert”) and specify the expected format of the output.

- Reasoning Techniques: For complex problems, ask the AI to break the solution into explicit steps (Chain-of-Thought) or to ask clarifying questions before producing any code (Question-First Mode). This helps keep the output aligned with your true intent.
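To make these practices concrete, here is a minimal Python sketch that assembles a prompt from its parts: a role, the task, optional project context, the expected output format, and the two reasoning instructions. `PromptSpec` and `build_prompt` are hypothetical helpers written for illustration; they are not part of any Gemini or ChatGPT API, and the resulting string is simply text you would paste into whichever assistant you use.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a well-structured prompt."""
    role: str                      # persona the AI should adopt
    task: str                      # what we actually want done
    output_format: str             # e.g. "a single Python function with a docstring"
    context: list[str] = field(default_factory=list)  # project constraints
    step_by_step: bool = False     # Chain-of-Thought: ask for explicit steps
    questions_first: bool = False  # Question-First Mode: clarify before coding


def build_prompt(spec: PromptSpec) -> str:
    """Assemble a plain-text prompt, one instruction per line."""
    lines = [f"Act as {spec.role}.", f"Task: {spec.task}"]
    if spec.context:
        lines.append("Context:")
        lines.extend(f"- {item}" for item in spec.context)
    lines.append(f"Output format: {spec.output_format}")
    if spec.questions_first:
        lines.append(
            "Before writing any code, ask me clarifying questions and wait for my answers."
        )
    if spec.step_by_step:
        lines.append("Break the solution into numbered steps and explain each one.")
    return "\n".join(lines)


if __name__ == "__main__":
    spec = PromptSpec(
        role="a cloud security expert",
        task="Review this IAM policy for overly broad permissions.",
        output_format="a Markdown table of findings with a severity column",
        context=["The policy is attached to a CI/CD service account."],
        step_by_step=True,
        questions_first=True,
    )
    print(build_prompt(spec))
```

Keeping the prompt as structured data rather than an ad hoc string makes it easy to reuse the same role and context across tasks and to switch the reasoning techniques on only for the problems that need them.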

The Art of Validating AI-Generated Code

AI can deliver a draft of code in seconds, but the responsibility of ensuring its quality, security, and alignment with the project’s architecture remains ours. The developer’s role becomes that of a meticulous reviewer and careful architect who does not blindly trust machine outputs.

- Think Like a Hacker: Look at code not just for functionality, but for vulnerabilities. AI-generated code can introduce subtle flaws that open the door to attacks, such as building SQL queries by concatenating user input, which enables SQL injection.

- Optimization is Key: AI outputs tend to be generic. It’s our job to refine and optimize them to ensure efficiency, scalability, and adherence to best practices.

- Testing is Non-Negotiable: Regardless of the code's origin, thorough unit and integration testing remains our strongest guarantee that the system will behave as expected in production.

Consequences and the Non-Negotiable Commitment

The speed AI brings to development cannot overshadow the fundamental responsibility of security. Using AI without an unwavering commitment to code review and quality assurance opens a window of opportunity for cybercrime. AI-generated code, if not carefully inspected, may contain subtle vulnerabilities invisible at first glance but exploitable by skilled attackers.

The developer, therefore, remains the guardian of security. Cybersecurity culture cannot be outsourced to a machine. Our commitment to software quality includes rigorous human audits, a critical eye for every line of code, and a proactive mindset to identify and fix vulnerabilities.

Another consequence often overlooked in our pursuit of productivity is the atrophy of our reasoning skills. Over-reliance on AI and the convenience it provides can lead us to a dangerous point: blindly trusting its responses. By accepting AI's solutions without questioning them, we risk weakening our ability to process diverse information, generate creative insights, and exercise logical thinking and problem-solving. Ultimately, the very tool designed to help us can end up confining us to the limits of its own knowledge.

AI is a powerful tool for producing a first draft, but refining and validating that draft, and making sure it becomes a robust, secure, and functional solution, is still our job. The era of co-creation is here, and with it our role evolves toward what truly matters: strategy, critical thinking, and the relentless pursuit of excellence.